Future Technologies Conference 2023: Thoughts and Reflections

“Nobody can predict the future. But, we have to try.”

In early November, I was invited to speak at the Future Technologies Conference (FTC) in San Francisco. I was grateful for the opportunity to take the stage and share my research, and equally grateful to be present and learn from others. The quote above was stated by the keynote speaker, Tom Mitchell, who opened the conference. While Mitchell’s words hold a certain truth, they also invite us to delve deeper into the intricate dance between anticipation and adaptability in our rapidly changing world.

Any time I think about the future, I am brought back to a Bolivian proverb which I learned from distinguished writer, sociologist, feminist, and activist Silvia Rivera Cusicanqui. The saying, roughly translated, goes like this:

“The future is at my back. I stand in the present, and all we can see is the past.”

It’s an idea that initially may seem foreign, but as it settles in, it reveals a profound wisdom. We can only tell what is likely to happen by what has already happened. In Western culture, we’re often conditioned to fixate on the future, perpetually reaching for it, striving to predict and shape what’s to come. However, the reality is that while we can make educated guesses, the future remains shrouded in uncertainty. The unpredictable nature of the future raises questions about the power of self-fulfilling prophecies.

As we delve into the realm of future technologies, the landscape is vast and bustling with innovation. Many breakthroughs are driven by predictive abilities, often harnessed for purposes related to commerce, such as the orchestration of emotional responses in marketing endeavors. We are in a time where technologies are being deployed at great speed with very few guardrails in place, disregarding potential consequences. It is essential to recognize that these powerful technologies are not solely defined by their applications, but also by what goes into the making of them, including raw materials and data.

The creators behind new technologies, such as some of the speakers at the conference, often have noble intentions, with a focus on critical global issues like climate change, sustainability, public health, and technologies that enhance the well-being of individuals. Nevertheless, there is a recurring pattern where technologies take unforeseen paths, diverging from their original intentions. This becomes particularly complex when dealing with formidable forces like artificial intelligence (AI) and virtual reality (VR). These technologies are developing incredibly rapidly, with nearly endless possibilities, along with a lot of ethical concerns.

The topics covered at FTC included:

Deep Learning

Large Language Models

Data Science

Ambient Intelligence

Computer Vision

Robotics

Agents and Multi-agent Systems

Communications

Security

e-Learning

Artificial Intelligence

Computing

In this post, I will give an overview of my experience at the conference, including the talk that I presented, and share some highlights from other presentations.

My Presentation

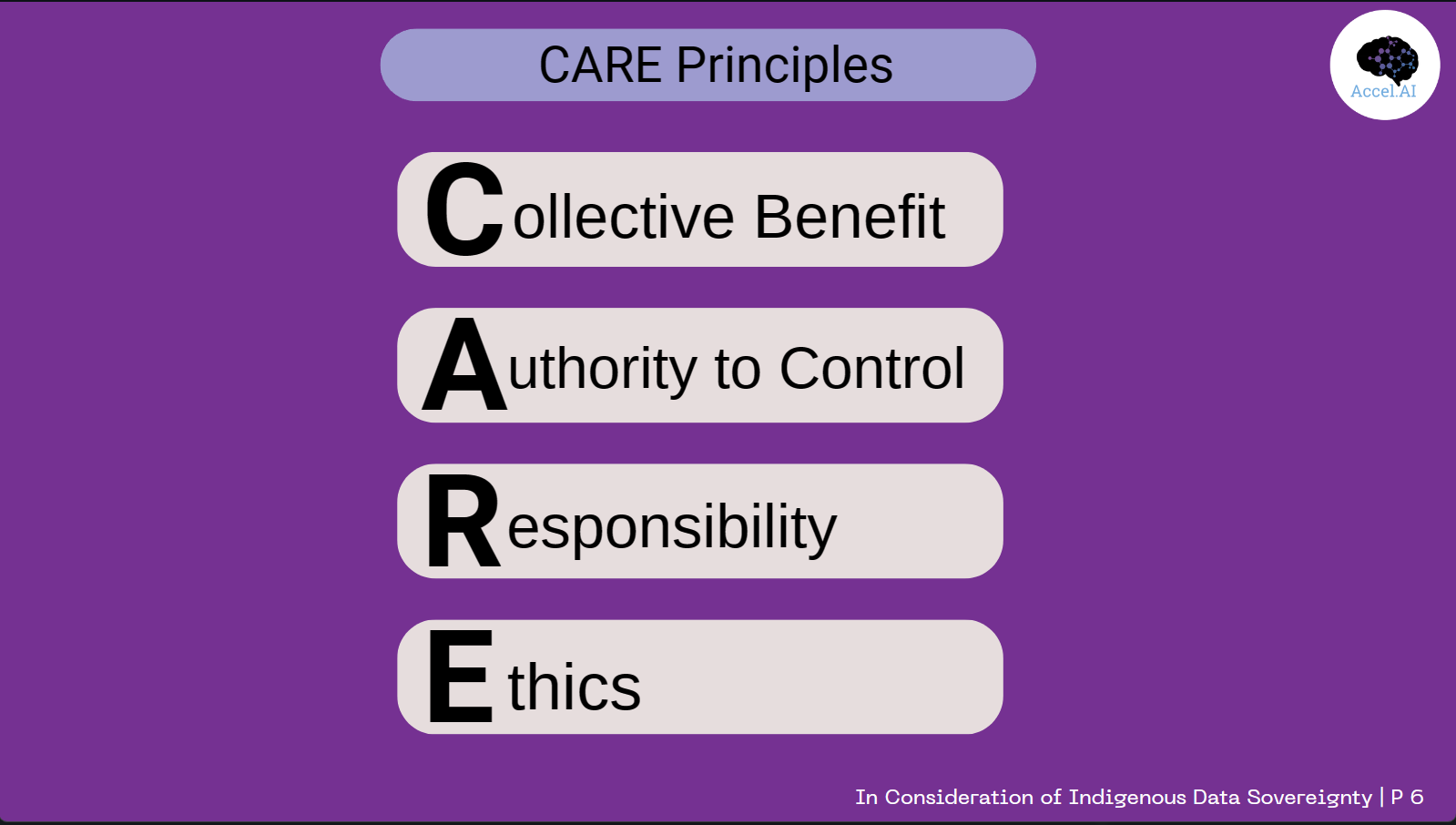

The Future Technologies Conference covered a wide range of topics and specialties, with mine falling under data science. My presentation, titled “In Consideration of Indigenous Data Sovereignty: Data Mining as a Colonial Practice”, focused on data mining. During my talk, I explained what Indigenous Data Sovereignty is and why it is important, before describing the CARE Principles of Indigenous Data Governance: Collective Benefit, Authority to Control, Responsibility, and Ethics.

To exemplify how the CARE principles could be applied throughout the data lifecycle, I reviewed a case study to first show how the above principles are often missing in data collection practices.

A European NGO went to Burundi to collect data on water accessibility.[1]

The NGO failed to understand:

1. The community’s perspective on the actual central issues

2. The potential harms of their actions

By sharing data publicly, which included geographic locations, the NGO put the community at risk.

Collective privacy was violated and there was a loss of trust.

The CARE principles were violated, particularly Collective Benefit and Responsibility.

I closed the talk with some recommendations. Three main data challenges need to be remediated (data collection, data access, and relevance) in order to allow Indigenous peoples access to, use of, and control over their own data and information.[2] It is vital to note that there are varying local concerns in different regions, although all have been negatively influenced and impacted by long-standing exploitative colonial practices. It is imperative that we continue to educate ourselves and question broader narratives that stem from colonial roots.

It was apparent that many attendees hadn’t considered the approach I presented, yet it resonated with them. I hope I prompted the attendees to think about data from a different perspective and to think about the people behind the data.

I was amazed at how well it was received and by the feedback and questions that I got. One question was about the case study that I presented: I was asked how exactly the people in question were harmed by data collection that did not adhere to the CARE principles. I explained in more detail how the community in Burundi from the case study was harmed by researchers who ended up sharing their personal data, including location data, which broke the community’s trust. This shows that privacy is not only a personal issue; for Indigenous communities, privacy is often a collective issue. This might not be considered from a Western perspective, which views privacy as purely individualistic. By expanding our understanding of human values and how they can vary culturally and regionally, we can better understand how data collection and new technologies will affect different populations.

Afterwards, many people approached me and wanted to discuss my research further. The best comment I got was simply: “Your research is so rare.”

That is why I wanted to present this work at this particular conference on future technologies, because so many of these technologies rely on data, and a lot of it. Data often comes from people, and it has a lot of value. Some say it is more valuable than any other resource. Most people benefit through the convenience of the insights and apps developed from public data. However, why is it only corporations who benefit monetarily from everyone’s data? Why is this outright exploitation allowed? Isn’t it neo-colonialism at work? This is the message I was trying to get across.

Notable Talks at FTC

The talks ranged across the board of new and future technology, centering around AI, VR, and more. At lunch, I met Myana Anderson, who told me that she was speaking about bears. Her talk, Metabolic Adaptation in Hibernating American Black Bears, was about how bears have something that humans don’t: the ability to be sedentary for long periods of time in order to hibernate. Human bodies are made to move, and if we are too sedentary we get blood clots and all sorts of health issues. She and her fellow researchers studied blood samples from hibernating bears to see what exactly it is that allows bears to remain immobile and maintain homeostasis. They collected and studied this data to see what we could learn about treating a variety of sedentary-related human diseases and helping people with conditions that worsen with immobility.

This was certainly unique and compelling research that could potentially benefit people with disabilities and illnesses who are immobilized. However, one aspect worries me: are we headed for a dystopia of immobility, where life is lived in VR and people turn into blobs and hibernate like bears, due to this research? This was not mentioned in the talk; it is purely my speculation. Is this really the direction we want to go in? Why can’t we find ways to stay mobile and active in our world? Or would this research truly be used just to support people with conditions that force them to be immobile, and not to let the general population sit without moving for long periods?

It was interesting because it was far removed from my own research, but I remain slightly worried about how it will be used. It was also interesting to consider a study that uses data not from humans but from animals, which may necessitate the consideration of animal rights going forward.

Another of the talks which stood out to me was called The Effects of Gender and Age-Progressed Avatars on Future Self-Continuity, by Marian McDonnell. The research was on the use of VR to create age-progressed avatars, in an effort to make people have more empathy for their future selves and save more money for their retirements. The idea is that people find it easy to empathize with and care for their parents and children, but not for themselves in the future. The researchers did find that this approach was effective in getting people to think about their retirement more and put money away for their own futures. However, the most interesting thing in this study was that there were differences for men versus women. When men were introduced via VR to an older version of themselves, they thought they looked like their father, and thought it was neat. When women did the same, they were shocked and terrified to see themselves aged.

Women and men are treated so differently, socially and culturally, with such different expectations, including around aging. Older women are not represented as having worth; rather, they are really quite invisible, whereas older men are shown as still being attractive, and are given an air of respect that women are not afforded. This social conundrum became very clear through their study, and they made sure to include it as a vital part of the research, which I thought was notable. Repairing these inequalities might take more than VR, but doing so might be an interesting body of research to approach. These deep-seated inequalities make themselves visible during projects such as this, which presents an opportunity to address them in appropriate and creative ways.

Final Thoughts

Throughout the conference, there were instances where I hesitated to express my thoughts, observing a prevailing emphasis on valuing speed and sales, and a lack of responsibility and transparency considerations. While some discussions touched on the ethical dimensions of technology, particularly in environmental applications, the technical details often delved into intricacies beyond my social science expertise. It was an opportunity to work on my own knowledge development in technical areas, and share knowledge with others in adjacent fields. That is why in-person conferences are so vital, so that the knowledge shared can mesh together and those in attendance can come away with a better understanding of things that may have been overlooked.

As I sat in attendance, occasions arose where I wished to inquire about ethical considerations. In one of these moments, another participant raised a question about the same concerns I had, only to receive a response acknowledging a lack of expertise in that domain. I found this a bit concerning; however, it highlights the necessity of safety and responsibility in what we are building now and in the future.

In addressing the rapid evolution of the present into the future, concerns inevitably arise. Rather than understanding these as worries, reframing them as foresight becomes crucial for establishing checks, balances, and comprehensive protections around emerging technologies. This includes considerations not only during implementation but also at the initial stages of the data lifecycle, ensuring safeguards at every level without causing unintended harm. The question persists: Can we mitigate potential harms associated with new technologies, or is some level of harm inevitable?

Presently, an opportune moment exists for integrating ethics into technological discourse. However, it is imperative to approach this integration with an awareness of historical and existing systemic contexts. This nuanced approach is essential to navigate ethical considerations in a manner that acknowledges the complexities of past and current systems.

References

[1] Abebe, Rediet, Kehinde Aruleba, Abeba Birhane, Sara Kingsley, George Obaido, Sekou L. Remy, and Swathi Sadagopan. “Narratives and Counternarratives on Data Sharing in Africa.” In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, 329–41. Virtual Event Canada: ACM, 2021. https://doi.org/10.1145/3442188.3445897.

[2] Rodriguez, Oscar Luis Figueroa. “Indigenous Policy and Indigenous Data in Mexico: Context, Challenges and Perspectives.” In Indigenous Data Sovereignty and Policy, 130–47. Routledge Studies in Indigenous Peoples and Policy. Abingdon, Oxon; New York, NY: Routledge, 2021.