What is the connection between data governance, the environment, and the rights of Indigenous peoples?

In the intersection between rising technologies based on data and the need to protect the earth and all its peoples, we find Indigenous Data Sovereignty (IDS). Indigenous communities are disproportionately affected by extraction and exploitation practices throughout the world, which harms them and the earth. (IPCC, 2022) How can Indigenous Data Sovereignty help?

Before colonization, Indigenous peoples had sovereignty over their data, in forms such as art and storytelling, and one thing that links the diverse populations of Indigenous communities is their inherent connection to the natural world. These connections to data and the earth have been corrupted by colonization, which has historically legitimized and legalized the exploitation of land, resources, and the people themselves.

We highlight the example of mining metals from the earth to reflect the term data mining, as, on the surface, mining appears to be a part of our modern world and how we extract metals necessary for many things that we use daily, such as smartphones and computers; however, when we look closer, we can see the complexity and the harms that come to communities and the natural environment in this extraction process. Data mining is different from metal mining in several ways; one being that data is not something that occurs naturally, but that must be produced, by people. Data mining is often compared to extracting resources, or as a sort of modern-day land grab. (Couldry and Mejias, 2021) Metal mining also has negative implications for Indigenous data protection and protection of Indigenous peoples’ health and environment, a further implication of the colonial nature of the practice. IDS would help to mitigate harm in these areas.

In my last article, global data law was discussed, focusing on the inclusion of the digital Non-Aligned Movement and Indigenous Data Sovereignty. In this post, the connection to how we steer global data governance towards protecting the natural environment will be explored, examining case studies on metal mining in Mexico and exploitation of resources in Colombia. First, we will review the current status of the environment and climate change, including how data and law are used to help Indigenous communities in contemporary times.

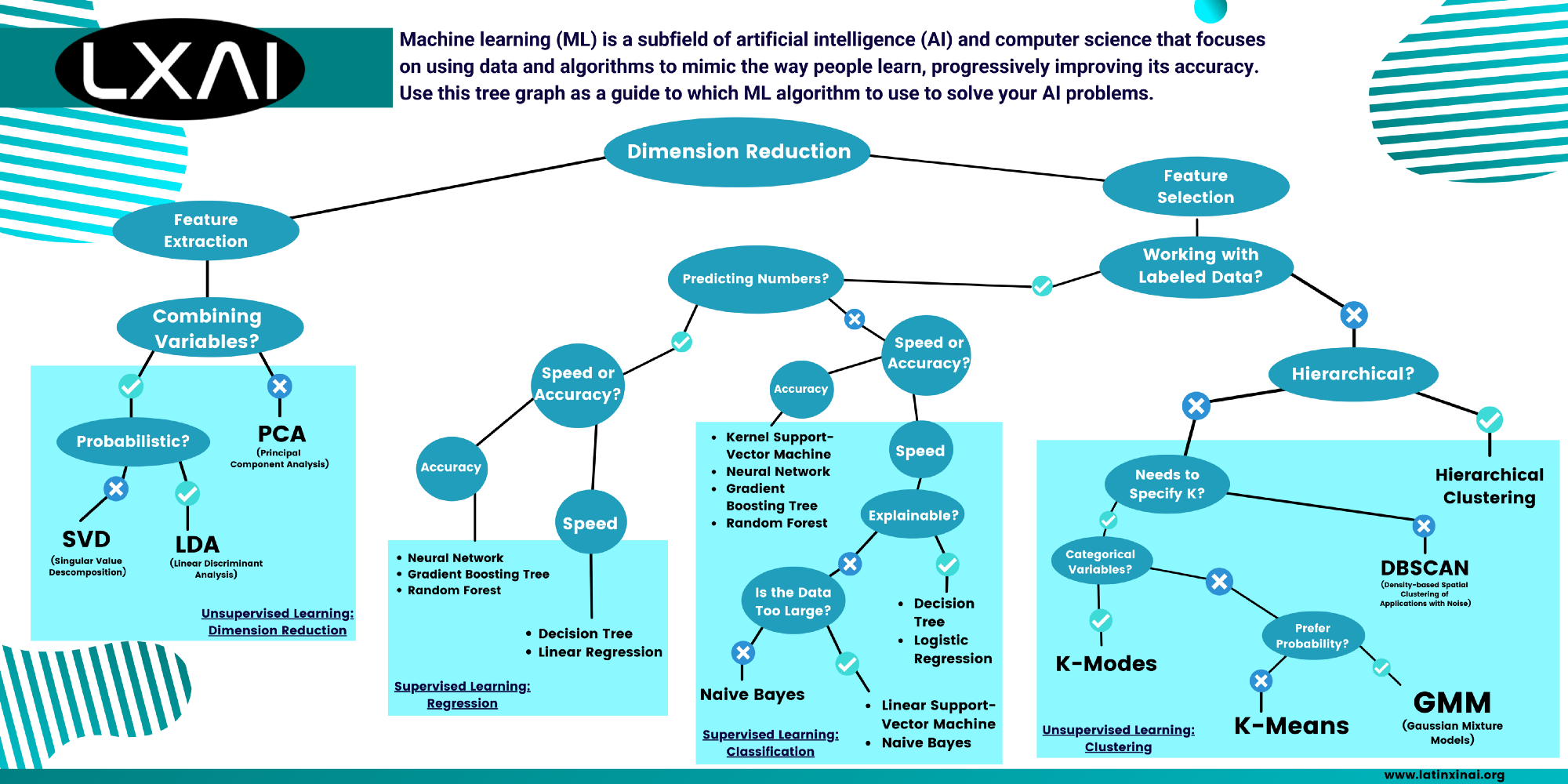

Tackling Climate Change with ML

The world is aware that we are in a climate crisis, and we have the technology and means to solve it. Microsoft Research published an article titled “Tackling Climate Change with Machine Learning” which addresses suggestions for switching to low-carbon electricity sources, lowering carbon emissions, removing CO2 from the atmosphere, and generally incorporating ML practices to improve standards for climate impact in the areas of Education, Transportation, Finance, Agriculture, and Manufacturing. (Rolnick et al, 2019) While, the recent UN report on climate change provides the data and research needed to turn the ship around if only policymakers and corporate powers can get on board and make serious changes, and soon. The report based its findings on scientific knowledge as well as Indigenous and local knowledge to reduce risks from human-induced climate change while evaluating climate adaptation processes and actions. (IPCC, 2022)

The path forward includes a lot of moving parts, and I would like to highlight the importance of Indigenous rights in this process. Indigenous ideologies are based on the core tenet that there is really no separation between people and the earth. On the other hand, governments and laws globally have a long colonial history of treating land as a commodity to be exploited for profit, endorsed by development discourses. (Rojas-Páez and O’Brien, 2021)

I want to stress the importance of mitigating undue harm to Indigenous peoples when applying data and machine learning technologies to help the planet. Sometimes when we set out to do good, it ends up hurting people unintentionally and reproducing colonial constructs. It is a delicate balance when outside researchers approach issues where they think they know the answers and what is best for others, but if we collaborate efforts and take responsibility for our shared planet, spending more time listening and less prescribing, I think that ultimately is what helps all people the most.

The Native American Rights Fund (NARF) stands to protect the rights of indigenous populations in the US, and their current environmental work primarily concerns climate change. On the international level, NARF has represented the National Tribal Environmental Council and the National Congress of American Indians (NCIA) via the United Nations Framework Convention on Climate Change, ensuring the protection of indigenous rights in international treaties and agreements governing greenhouse gas emissions. NARF represents tribes in court cases, such as in a case representing Alaskan Native tribes against energy companies for damages. They also help tribes relocate when necessary, as the impact of climate change in Alaska is immense and immediate. (NARF, 2019) NARF uses the law to help tribes in America, standing against those who benefit from exploiting their lands and resources.

Environmental problems including climate change, habitat destruction, mining wastes, air pollution, hazardous waste disposal, illegal dumping, and surface/groundwater contamination cause an array of health and welfare risks to Indigenous peoples. NARF is one organization helping tribes protect the environment as a top priority and helps to enforce laws such as the Safe Drinking Water Act, the Clean Air Act, and the Clean Water Act. (NARF, 2019)

NARF has not yet helped to enforce laws around data protection and mining practices for Indigenous people. These laws are nascent in their development and governments globally are only beginning to find consensus on their implementation. NARF is well-positioned to lead the way or join the movement that is beginning to ensure indigenous data, history, land, and representation is protected in the digital future.

NARF is well-positioned to lead the way or join the movement that is beginning to ensure indigenous data, history, land, and representation is protected in the digital future.

Indigenous data policy and case studies in Mexico and Colombia

Indigenous Data Sovereignty (IDS) is not only relevant but necessary for creating fairer governance and a more prosperous future for Indigenous peoples (Rodriguez, 2021 P.139), as was shown repeatedly throughout the book, Indigenous Data Sovereignty and Policy (2021). We will rely on two case studies from the book to exemplify this further and connect them to climate change and environmental concerns.

The chapter by Oscar Luis Figueroa Rodriguez focused on IDS in Mexico and examines unsolved priorities from history that involve the use of data and the particular way that information and knowledge have been generated and transformed, controlled, and exploited across many contexts, indicating a need for IDS. (Rodriguez, 2021 P.139) Another chapter of the book highlighted Colombia’s struggle with IDS (Rojas-Páez and O’Brien, 2021), and introduced a 2019 ruling (the Jurisdicción Especial para la Paz “Special Jurisdiction for Peace” or JEP) which declared nature to be a victim of Colombia’s conflict, pointing to affected ecosystems including rivers and the need for them to gain legal protection. (Rojas-Páez and O’Brien, 2021)

Access to resources such as water is globally justified to be controlled selectively and exclusively, as nature is commodified, however, Indigenous narratives stand in opposition to this. (Johnson et al., 2016) (Rojas-Páez and O’Brien, 2021) By legally protecting rivers and other ecosystems, historically normalized exploitation practices must come to an end. A century ago, in the 1920s, the implementation of large-scale economic projects led to the legitimization of direct violence against Indigenous peoples, for example, in the Amazon and northern region of Santander, oil and rubber plantations resulted in several Indigenous communities’ disappearance, through enslavement and assassination. (Rojas-Páez and O’Brien, 2021)

This wasn’t a practice of Indigenous data mining but of erasure. Not only were Indigenous peoples and their knowledge misrepresented, but they were being wiped out. The only interest at the time was on what resources could be extracted from the land and how much it was worth. As there is now more of an interest in collecting Indigenous data as another form of resource mining, IDS holds great importance in regard to Indigenous communities for it stands to mitigate “. . . demands for territorial rights, food sovereignty and access to natural resources such as water.” (Rojas-Páez and O’Brien, 2021)

Indigenous worldviews which hold that humans are a part of the land and cannot be separated from it have been undermined by the ideology that land is a commodity to be exploited for economic purposes. Rojas-Páez and O’Brien bring up the question, “. . . why is the human cost of the expansion of the extractive economy not challenged in countries whose Indigenous communities are still facing extermination?” (2021) The authors turn to scholar Julia Suarez-Krabbe, who commented on the invisibility of the impact of colonial practices in places like Colombia and explained that “. . . the force of colonial discourse lies in how it succeeds in concealing how it establishes and naturalizes ontological and epistemological perspectives and political practices that work to protect its power” (Suárez-Krabbe, 2016). (Rojas-Páez and O’Brien, 2021) However, recent rulings like the JEP work to recognize Indigenous ontologies, how their data is represented, and to protect the land.

‘The protection of personal data is a constitutional and fundamental right in Colombia’ according to Carolina Pardo, Partner in the corporate department of Baker McKenzie in Colombia. Her article, Colombia Data Protection Overview in DataGuidance, references the Congress of Colombia enacted Statutory Law 1581 of 2012, which Issues General Provisions for the Protection of Personal Data (‘the Data Protection Law’), which ‘develop the constitutional right of all persons to know, update, and rectify information that has been collected on them in databases or files, and other rights, liberties, and constitutional rights referred to in Article 15 of the Political Constitution.’

Indigenous inspectors have been named to monitor natural resources on reservations since 1987. In 1991, Colombia approved their new Constitution recognizing Indigenous rights, including ethnic and cultural diversity, languages, communal lands, archeological treasures, parks and reservations which they have traditionally occupied; and measures to adopt programs to manage, preserve, replace and exploit their own natural resources. (University of Minnesota Human Rights Library, 1995)

The Colombian government’s efforts and commitments to strengthen the dialogue on human rights have been recognized by political figures of the European Union. Patricia Llombart, Colombia’s EU Ambassador, stated that Colombia has shared values with the EU and is seen as a reliable and stable partner. Where the EU has been involved, international agreements which include protecting Indigenous rights as well as labor rights and rights for children have been signed in Andean countries. (Blanco Gaitan, 2019)

Turning now to the Mexican chapter, we see the same history echoed. Without the knowledge or permission of local indigenous peoples, external actors have historically conducted research to better understand the values of natural resources in Indigenous territories, demonstrating a lack of understanding of the implications of exploiting things such as minerals, timber, wildlife, plants, and water for the people who live there, in terms of health and environmental consequences, infrastructure, and investments. (Rodriguez, 2021 P.140–141)

For example, extractive practices such as mineral mining profoundly impact Indigenous communities and have only been promoted by recent presidents in Mexico. In the last 12 years, 7% of Indigenous territories have been lost for the sake of mining alone, frequently without even informing Indigenous communities. (Valladares, 2018, p. 3) (Rodriguez, 2021 p.140–141)

Mining metals from the earth is necessary for much of the technology we know and love today, however, there is a price to pay, and I am not referring to the cost of the latest iPhone. The major cost falls on the people who live where these metals are extracted, or, where they used to live if they had their territories taken away for the purpose of mining. (Rodriguez, 2021 P.140–141)

We use mining as an example, which clearly shows the need for IDS and the consequences, as communities were neither considered nor informed about the extremely invasive methods and exploitation techniques involved in metal mining from their land in Mexico, including not only the use of heavy machinery but massive lixiviation processes mainly with sodium cyanide, which several European countries have forbidden. (Boege, 2013). (Rodriguez, 2021)

January of this year saw a vote by the Mexican Congress to approve the Federal Law for the Protection of the Cultural Heritage of Indigenous and Afro-Mexican Peoples and Communities. (Hermosillo and Soria, 2022) This law includes protecting Indigenous communities and their rights to property and collective intellectual property, traditional knowledge and cultural expressions, including cultural heritage, in an “. . . attempt to harmonize national legislation with international legal instruments on the matter, trying to give a seal of ‘inclusivity’ to minorities” (Schmidt, 2022)

“Intangible cultural heritage is defined as the uses, representations, expressions, knowledge, and techniques; together with the instruments, objects, artifacts, and cultural spaces that are inherent to them; recognized by communities, groups, and, in some cases, individuals as an integral part of their cultural heritage.” (Schmidt, 2022)

These new and relevant definitions, such as “cultural heritage,” “misappropriation,” and “collective property right” are helpful to guide third parties on identifying whether authorization is necessary for use of Indigenous or Afro-Mexican cultural heritage, as failure could result in infringements and/or felonies under Mexican law. (Hermosillo and Soria, 2022)

We can see how the representation of cultural heritage in both of these examples, in Mexico as well as Colombia, has gained importance and legal protection, which is vital to the conversation about data, as cultural heritage represents data about a collective. This further relates to protecting the natural environment, because by protecting cultural heritage, the lands and natural resources of Indigenous communities are also protected.

There is still a place for Indigenous Data Sovereignty as the laws change to protect Indigenous rights.

“IDS could help fill the gap regarding the lack of evaluations as an appropriate approach in the design and implementation of monitoring, evaluation, and learning (MEL) local systems, controlled and used by Indigenous communities.” (Rodriguez, 2021 p.143)

Rodriguez went on to list recommendations from the Organization for Economic Co-operation and Development (OECD) to move forward on these issues.

The OECD recommends four main areas to strengthen Indigenous economies:

1. improving Indigenous statistics and data governance

2. creating an enabling environment for Indigenous entrepreneurship and small business development at regional and local levels

3. improving the Indigenous land tenure system to facilitate opportunities for economic development

4. adapting policies and governance to implement a place-based approach to economic development that improves policy coherence and empowers Indigenous communities

(OECD, 2019, p. 5) (Rodriguez, 2021 p.143)

Lists like this are helpful, however, they must be approached with caution and in communication with the people which they aim to help. These steps must be implemented by Indigenous peoples themselves with the support of organizations such as the OECD.

Through exploring these case studies from Mexico and Colombia, it is clear that in considering public policies for data governance for Indigenous peoples, there is a need to remediate three main data challenges: data collection, data access, and relevance in order to access, use and control their own data and information. (Rodriguez, 2021 P.144) This is something that must be understood for data governance around the world, and to note that there are different local concerns in different regions, but which have all been negatively influenced and impacted by long-standing colonial and exploitation practices. It is important that we continue to educate ourselves and question broader narratives that stem from colonial roots.

You can stay up to date with Accel.AI; workshops, research, and social impact initiatives through our website, mailing list, meetup group, Twitter, and Facebook.

www.accel.ai

Join us in driving #AI for #SocialImpact initiatives around the world!

References

Blanco Gaitan, D. (2019, July 2). Challenges of Colombian data protection framework towards a European adequate level of protection. DUO. Retrieved April 24, 2022, from https://www.duo.uio.no/handle/10852/68578

Boege, E. (2013). El despojo de los indígenas de sus territorios en el siglo XXI. Movimiento Mesoamericano Contra el Modelo Extractivo Minero. Retrieved from https://movimie ntom4.org/2013/06/el-despojo-de-los-indigenas-de-sus-territorios-en-el-siglo-xxi/.

Nick Couldry & Ulises Ali Mejias (2021): The decolonial turn in data and technology research: what is at stake and where is it heading?, Information, Communication & Society, DOI: 10.1080/1369118X.2021.1986102

Cunsolo Willox, A., Harper, S. L., & Edge, V. L. (2013). Storytelling in a digital age: digital storytelling as an emerging narrative method for preserving and promoting indigenous oral wisdom. Qualitative Research, 13(2), 127–147. https://doi.org/10.1177/1468794112446105

IPCC, 2022: Summary for Policymakers [H.-O. Pörtner, D.C. Roberts, E.S. Poloczanska, K. Mintenbeck, M. Tignor, A. Alegría, M. Craig, S. Langsdorf, S. Löschke, V. Möller, A. Okem (eds.)]. In: Climate Change 2022: Impacts, Adaptation, and Vulnerability. Contribution of Working Group II to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change [H.-O. Pörtner, D.C. Roberts, M. Tignor, E.S. Poloczanska, K. Mintenbeck, A. Alegría, M. Craig, S. Langsdorf, S. Löschke, V. Möller, A. Okem, B. Rama (eds.)]. Cambridge University Press. In Press.

Johnson, H., Nigel, S., and Reece, W. (2016). The commodification and exploitation of fresh water: property, human rights, and green criminology. Institutional Journal of Law, Crime and Justice, 44(2016), 146–162.

NARF. (2019, January 25). Protect tribal natural resources. Native American Rights Fund. Retrieved April 8, 2022, from https://www.narf.org/our-work/protection-tribal-natural-resources/

OECD. (2019). Linking Indigenous Communities with Regional Development, OECD Rural Policy Reviews. Paris: OECD Publishing. doi:10.1787/3203c082-en.

Pool, I. (2016). Colonialism’s and post-colonialism’s fellow traveller: the collection, use and misuse of data on indigenous peoples. In: Indigenous Data Sovereignty Toward and Agenda. ANU Press.

Rodriguez, O. L. F. (2021). Indigenous policy and Indigenous data in Mexico. In Indigenous data sovereignty and policy (pp. 130–147). essay, ROUTLEDGE.

Schmidt, L. C. (2022). New general law for the protection of cultural heritage of Indigenous and Afro-Mexican peoples and communities in Mexico. The National Law Review. Retrieved April 24, 2022, from https://www.natlawreview.com/article/new-general-law-protection-cultural-heritage-indigenous-and-afro-mexican-peoples-and?msclkid=8dc1a6c6c39511ec987d4aa0940a1ab5

Suarez-Krabbe, J. (2016). Race, Rights and Rebels: Alternatives to Human Rights and Development from the Global South. Lanham: Rowman and Littlefield.

Valladares, de la C.L.R. (2018). El asedio a las autonomías indígenas por el modelo minero extractive en México. Iztapalapa. Revista de Ciencias Sociales y Humanidades, 85(39), 103–131, julio-diciembre de 2018. ISSN:2007–9176. Retrieved from http://www.scielo .org.mx/pdf/izta/v39n85/2007–9176-izta-39–85–103.pdf.

University of Minnesota Human Rights Library. Minneapolis, Minn. :University of Minnesota Human Rights Center, 1995. Retrieved from http://hrlibrary.umn.edu/iachr/indig-col-ch11.html